The Decomposed Prompt Pattern

Part 3 — Architectural Patterns for Generative AI

When you’re starting out with large language models (LLMs), you might think that one really long, clever prompt will give you a perfect answer.

Sometimes it works, but often, it doesn’t.

When prompts get complicated, the results can be hit or miss, cost more, and be tough to control. The model can start missing the point, make things up, or blur the lines between what it should and shouldn’t be doing.

But here’s another way to think about it:

Instead of trying to make prompts super smart, just make them smaller and simpler.

This is where the Decomposed Prompt Pattern comes in. It breaks one big prompt into smaller prompts, each with its own job and only the background it actually needs.

Context

Today’s AI systems use LLMs for a lot of things, like:

Thinking through tricky problems

Making good content

Doing things step by step

Teaming up with other tools

But we also know some things about LLMs that aren’t so great:

The longer a prompt, the more it costs.

Big prompts can lead to the model making stuff up.

If a prompt tries to do too much, it gets confused.

Trying to do everything in one go usually doesn’t work out.

Think of it like this: if you ask one prompt to do too much, it’s going to crack under the pressure.

Problem

A single prompt often has to:

Understand what you’re asking.

Figure out how to solve it.

Write the answer.

Check whether the answer is good.

Fix any mistakes.

This can cause:

Answers that aren’t consistent.

Trouble figuring out what went wrong.

No clear sign of where things failed.

Prompts that are hard to change.

When something goes wrong, you can’t tell which part of the prompt caused it.

Forces

When you’re building complex systems with LLMs, you’ll run into these forces:

A single prompt is fragile. The longer it gets, the harder it is to manage and test.

You need to check quality more than once. Checking only the final answer isn’t enough; it helps to check the reasoning along the way.

One user request can involve many different tasks. It might need planning, writing, checking, and calling other tools. Trying to do it all in one prompt can be a headache.

Context space is limited, and every token costs money. Cramming everything into one prompt makes it expensive and can confuse the model. Smaller prompts tend to work better.

You need steps that are predictable and testable. Splitting things up lets you check each part, retry the one that fails, and see where things went wrong.

All of this makes you want to move away from one huge prompt and toward a system where each part has one job and isn’t overwhelmed with context.
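The idea of checking each part, retrying only the step that fails, and seeing where things went wrong can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a prescribed implementation: each step is assumed to be a function that returns text, and `run_step`, `StepFailed`, and the validation callback are hypothetical names.

```python
from typing import Callable


class StepFailed(Exception):
    """Raised when one named step keeps failing validation after all retries."""

    def __init__(self, step: str, attempts: int) -> None:
        super().__init__(f"step '{step}' failed validation after {attempts} attempts")
        self.step = step
        self.attempts = attempts


def run_step(
    name: str,
    produce: Callable[[], str],
    is_valid: Callable[[str], bool],
    max_attempts: int = 3,
) -> str:
    """Run one small prompt step, validate its output, and retry only this step."""
    for _ in range(max_attempts):
        output = produce()  # in a real system: one focused LLM call
        if is_valid(output):
            return output
    # The error names the step, so a failure points at one prompt, not the whole chain.
    raise StepFailed(name, max_attempts)


# Usage with a hypothetical flaky writer: an empty draft first, then a real one.
responses = iter(["", "A short first draft."])
print(run_step("writer", lambda: next(responses), lambda text: len(text) > 0))
# prints: A short first draft.

try:
    run_step("reviewer", lambda: "", lambda text: len(text) > 0, max_attempts=2)
except StepFailed as err:
    print(err)  # prints: step 'reviewer' failed validation after 2 attempts
```

Because validation and retries are scoped to one step, a persistent failure surfaces as a named step rather than as a vague bad answer from one large prompt.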

Solution — The Decomposed Prompt Pattern

The Decomposed Prompt Pattern is all about taking one big request and splitting it into smaller, specialized prompts, each with its own focus.

Sometimes this looks like a pipeline, but building a pipeline isn’t the point.

The real win is that you’re cutting down a complex prompt into smaller, more focused prompts, where each one has:

A small goal

Only the context it needs

Clear inputs and outputs

How the prompts are composed (one after the other, at the same time, or a mix of both) matters less than the split itself.

What changes is how the problem is structured, not necessarily the wording of each prompt.

The key principle is separation of responsibilities.
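One way to give each prompt a small goal, limited context, and explicit inputs and outputs is to describe it as a small contract. The Python sketch below assumes intermediate results live in a dict keyed by name; `PromptStep` and its fields are hypothetical, not taken from any framework. Its `render` method passes only the inputs a step declares, so unrelated context (such as chat history) never reaches that prompt.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptStep:
    """One focused prompt: a single goal, declared inputs, and one named output."""

    name: str
    template: str  # the instruction for this step only
    inputs: tuple[str, ...]  # the only context keys this step may see
    output: str  # the key this step's result is stored under

    def render(self, state: dict[str, str]) -> str:
        missing = [key for key in self.inputs if key not in state]
        if missing:
            raise KeyError(f"{self.name} is missing inputs: {missing}")
        # Pass only the declared inputs, so unrelated context never reaches the model.
        context = {key: state[key] for key in self.inputs}
        return self.template.format(**context)


planner = PromptStep(
    name="planner",
    template="List the steps needed to answer: {request}",
    inputs=("request",),
    output="plan",
)
state = {"request": "Explain the Decomposed Prompt Pattern", "chat_history": "..."}
print(planner.render(state))
# prints: List the steps needed to answer: Explain the Decomposed Prompt Pattern
```

An orchestrator would store each step's result under its `output` key, so the next step can list that key among its `inputs`.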

Dependent Prompts

With dependent prompts, each step uses the output of the step before it.

This is helpful when:

Later steps need the reasoning produced by earlier ones.

The result improves with each pass.

You want to refine the output as you go.

Example

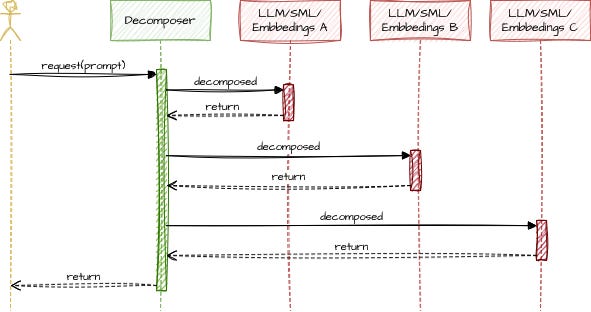

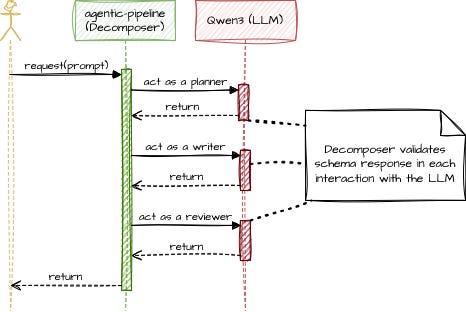

I’ve applied this pattern in a small experiment (available in my GitHub repo) where a service receives a prompt and processes it through three specialized components:

Planner: Breaks the request into a sequence of steps.

Writer: Produces a first draft based on the plan.

Reviewer: Reviews the draft and improves clarity, structure, and quality.

Each prompt is simpler and more focused than a single “mega-prompt”.

The result is not just better output; it’s a system that is easier to reason about, debug, and evolve.
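The planner, writer, and reviewer chain described above can be sketched as a simple sequence of dependent calls. This is not the code from the GitHub repo; it is a minimal Python illustration that assumes a model client taking an instruction plus an input and returning text. `fake_llm` is a hypothetical offline stand-in, and the three instruction strings are placeholders.

```python
from typing import Callable

# Hypothetical model client signature: (instruction, input) -> text.
LLM = Callable[[str, str], str]


def fake_llm(instruction: str, content: str) -> str:
    """Offline stand-in for a real model call; it just tags the input."""
    return f"[{instruction}] {content}"


PLANNER = "Break the request into a sequence of steps"
WRITER = "Write a first draft that follows this plan"
REVIEWER = "Improve the clarity, structure, and quality of this draft"


def run_pipeline(request: str, llm: LLM = fake_llm) -> dict[str, str]:
    """Dependent prompts: each step builds on the output of the one before it."""
    plan = llm(PLANNER, request)
    draft = llm(WRITER, plan)
    final = llm(REVIEWER, draft)
    # Keeping the intermediate results shows exactly which step produced what.
    return {"plan": plan, "draft": draft, "final": final}


result = run_pipeline("Explain the Decomposed Prompt Pattern")
print(result["plan"])
# prints: [Break the request into a sequence of steps] Explain the Decomposed Prompt Pattern
```

To try it against a real model, pass any function with the same `(instruction, content) -> str` shape as `llm`; each stage can also be tested on its own with a stub.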

Independent Prompts

Sometimes, prompts don’t need to rely on each other.

Independent prompts are a good fit when:

You want a few different points of view.

You can compare the results.

Steps can happen at the same time.

Examples:

Making a few drafts

Checking results against different criteria

Running safety or quality checks

This lets you run steps in parallel, reduce latency, and retry individual parts without starting over.
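Because independent prompts don’t wait on each other, they can be sent concurrently. The following Python sketch uses `asyncio.gather` with `return_exceptions=True`, so a failing check is returned as a value instead of being raised (which would lose the other checks’ results), and only that check is retried. `fake_llm` and the three check prompts are hypothetical placeholders for a real async model client.

```python
import asyncio
from typing import Awaitable, Callable

AsyncLLM = Callable[[str, str], Awaitable[str]]


async def fake_llm(instruction: str, content: str) -> str:
    """Offline stand-in for an async model call; the sleep mimics network latency."""
    await asyncio.sleep(0.1)
    return f"{instruction}: ok"


# Hypothetical independent checks: none of them needs another's output.
CHECKS = {
    "safety": "Flag any unsafe content",
    "facts": "List claims that need a source",
    "style": "Check the draft against the style guide",
}


async def run_checks(draft: str, llm: AsyncLLM = fake_llm) -> dict[str, str]:
    names = list(CHECKS)
    # All checks start together, so total time is roughly that of the slowest one.
    results = await asyncio.gather(
        *(llm(CHECKS[name], draft) for name in names),
        return_exceptions=True,  # a failed check is returned, not raised
    )
    outcome: dict[str, str] = {}
    for name, result in zip(names, results):
        if isinstance(result, Exception):
            # Retry only the check that failed instead of rerunning the batch.
            result = await llm(CHECKS[name], draft)
        outcome[name] = result
    return outcome


print(asyncio.run(run_checks("Some draft text")))
```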

Benefits

Using the Decomposed Prompt Pattern gives you:

More predictable outcomes

Smaller prompts that cost less

Less chance of the model making stuff up

Clear responsibilities

Easier debugging

Better scalability as the system grows

It also combines well with other patterns such as Guardrails and Retrieval-Augmented Generation (RAG).

Trade-offs

It’s not all easy.

You’ll have to deal with:

More orchestration logic

More things to keep track of

Figuring out how the prompts connect

Deciding what should rely on what

But the alternative is a giant prompt that quickly becomes a nightmare.

In my experience, it’s a trade-off worth making once a system moves past the experimentation phase.
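Deciding what should rely on what gets easier when the dependencies are written down explicitly. As one possible approach (not something the pattern requires), the Python sketch below uses the standard library’s `graphlib.TopologicalSorter` (Python 3.9+) to group hypothetical steps into batches: steps in the same batch don’t depend on each other and could run in parallel, while each batch waits for the ones before it.

```python
from graphlib import TopologicalSorter

# Hypothetical steps: each key lists the steps whose output it depends on.
dependencies: dict[str, set[str]] = {
    "plan": set(),
    "draft_a": {"plan"},
    "draft_b": {"plan"},  # two independent drafts from the same plan
    "compare": {"draft_a", "draft_b"},
    "review": {"compare"},
}


def execution_batches(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group steps into batches; steps within one batch can run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a cycle
    batches: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every step whose inputs are available
        batches.append(ready)
        sorter.done(*ready)
    return batches


print(execution_batches(dependencies))
# prints: [['plan'], ['draft_a', 'draft_b'], ['compare'], ['review']]
```

If two steps end up waiting on each other, `prepare()` raises `graphlib.CycleError`, so a wiring mistake is caught before any model is called.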

Closing Thoughts

Large prompts feel powerful — until they don’t.

Breaking a problem into smaller prompts may feel slower at first, but it gives you something far more valuable: control.

The Decomposed Prompt Pattern treats prompting as architecture.

And once you see prompts as components, everything starts to click.

If you’ve tried similar approaches — or struggled with overly complex prompts — I’d love to hear about it.
